In response to several requests, I finally completed the very first blog post on data quality management. In this post, I’ve mainly “set the scene” with the intention of publishing several more in-depth blog posts related to specific areas of my interest within data quality management, including but not limited to AI-augmented data quality management.

For now, this post focuses primarily on my personal, experience-based opinion on what influences the choice of a data quality management approach (i.e., something very similar to what I talked about at the HackCodeX Forum, which I posted about earlier and where the photo comes from).

Tag data governance

The United Nations University EGOV’s repository platform and five of my articles it recommends 📖📚🧐

Recently, the United Nations University announced the launch of the United Nations University EGOV’s repository platform, a centralized hub of specialized repositories tackling global challenges. It is dedicated to two topics: EGOV for Emergencies, which provides content on innovations in digital governance for emergency response, and Data for EGOV, a repository that “supports policymakers, decision-makers, researchers, and the community interested in digitally transforming the public sector through emerging technologies and data. The repository combines diverse academic documents, use cases, rankings, best practices, standards, benchmarking, portals, datasets, and pilot projects to support open data, quality and purpose of open data, application of data techniques analytics in the public sector, and making cities smarter. This repository results from the “INOV.EGOV-Digital Governance Innovation for Inclusive, Resilient and Sustainable Societies” project on the role of open data and data science technologies in the digital transformation of State and Public Administration institutions”. The latter recommends 286 reading materials (reports, articles, standards etc.) that I find very relevant to the topics described above and highly recommend browsing through. However, what made me especially happy while browsing this collection is the fact that five of these reading materials are articles (co-)authored by me. Therefore, considering that I do not always keep track of what I did in the past, let me use this opportunity to reflect on those studies, in case you have not come across them previously, as well as to refresh my own memories (some of them date back to the times when I worked on my PhD thesis).

By the way, every article is accompanied by tags that enrich the keywords with which the authors described it, with particular attention paid to the main topics, incl. “data analytics”, “smart city”, “open data”, “sustainability” etc. For the latter, “sustainability”, articles are tagged based on their compliance with specific Sustainable Development Goals (SDGs), which makes it possible to filter relevant articles by a specific SDG or to find out which SDGs your article contributes to. Although I kept in mind some SDGs that I find my research best suited to while conducting it, for one of the articles (the last one in the list) I was pretty surprised to see how SDG-compliant it is: it is tagged as compliant with 11 SDGs (incl. SDG-2, SDG-3, SDG-6, SDG-7, SDG-9, SDG-11, SDG-13, SDG-14, SDG-15).

So, back to those studies that the United Nations University recommends…

A multi-perspective knowledge-driven approach for analysis of the demand side of the Open Government Data portal, which proposes a multi-perspective approach where an OGD portal is analyzed from (1) citizens’ perspective, (2) users’ perspective, (3) experts’ perspective, and (4) state of the art. By considering these perspectives, we can define how to improve the portal in question by focusing on its demand side. In view of the complexity of the analysis, we look for ways to simplify it by reusing data and knowledge on the subject, thereby proposing a knowledge-driven analysis that supports the idea underlying OGD, namely their reuse. The Latvian open data portal is used as an example demonstrating how this analysis should be carried out, validating the proposed approach at the same time. We aim to (1) find the level of citizens’ awareness of the portal’s existence and its quality by means of a simple survey, (2) identify the key challenges that may negatively affect users’ experience in the course of a usability analysis carried out by both users and experts, and (3) combine these results with those already known from external sources. These data serve as an input, while the output is an assessment of the current situation that allows corrective actions to be defined. Since debates on the Latvian OGD portal, which serves as the use-case, are becoming more frequent, this study also brings significant benefit at the national level.

Transparency of open data ecosystems in smart cities: Definition and assessment of the maturity of transparency in 22 smart cities, which focuses on the issue of the transparency maturity of open data ecosystems seen as the key for the development and maintenance of sustainable, citizen-centered, and socially resilient smart cities. This study inspects smart cities’ data portals and assesses their compliance with transparency requirements for open (government) data. The expert assessment of 34 portals representing 22 smart cities, with 36 features, allowed us to rank them and determine their level of transparency maturity according to four predefined levels of maturity – developing, defined, managed, and integrated. In addition, recommendations for identifying and improving the current maturity level and specific features have been provided. An open data ecosystem in the smart city context has been conceptualized, and its key components were determined. Our definition considers the components of the data-centric and data-driven infrastructure using the systems theory approach. We have defined five predominant types of current open data ecosystems based on prevailing data infrastructure components. The results of this study should contribute to the improvement of current data ecosystems and build sustainable, transparent, citizen-centered, and socially resilient open data-driven smart cities.

Smarter open government data for society 5.0: Are your open data smart enough? This paper starts from the observation that the open (government) data initiative and users’ intent for open (government) data are changing continuously. Today, in line with IoT and smart city trends, real-time and sensor-generated data are of higher interest to users; such data are considered to be one of the crucial drivers of the sustainable economy, and might have an impact on ICT innovation and become a creativity bridge in developing a new ecosystem in Industry 4.0 and Society 5.0. The paper examines 51 OGD portals for the presence of the relevant data and their suitability for further reuse by analyzing their machine-readability, currency or frequency of updates, the ability to submit a request/comment/complaint/suggestion and its visibility to other users, and the ability to assess the value of these data as perceived by others, i.e., rating, reuse, comments, etc. Such an analysis is usually considered to be a very time-consuming and complex task, and is therefore rarely conducted. The analysis leads to the conclusion that although many OGD portals and data publishers are working hard to make open data a useful tool moving towards Industry 4.0 and Society 5.0, many portals do not even respect the principles of open data, such as machine-readability. Moreover, according to the lists of most competitive countries by topic, there are no leaders who provide their users with excellent data and service, so there is room for improvement for all portals. The paper shows that open data, particularly those that are published and updated in a timely manner, provided in a machine-readable format, and accompanied by support for their users, attract audience interest and are used to develop solutions that benefit the entire society (the case in France, Spain, Cyprus, the Netherlands, Taiwan, Austria, Switzerland, etc.). Thus, the publication of open data should be done not only because it is a modern trend, but also because it incentivizes scientists, researchers and enthusiasts to reuse the data by transforming it into knowledge and value, providing solutions, improving the world, and moving towards Society 5.0 or the super smart society.

Definition and evaluation of data quality: User-oriented data object-driven approach to data quality assessment proposes a data object-driven approach to data quality evaluation. This user-oriented solution is based on 3 main components: data object, data quality specification and the process of data quality measuring. These components are defined by 3 graphical DSLs that are easy enough to use even for non-IT experts. The approach ensures data quality analysis depending on the use-case, and allows analysing the quality of “third-party” data. The proposed solution is applied to open data sets. The results of applying the proposed approach demonstrated that open data have numerous data quality issues. The study also highlights common data quality problems detected not only in Latvian open data but also in the open data of 3 European countries – Estonia, Norway, and the United Kingdom. That is, none of the very simple or intuitive and even obvious use cases in which the values of the primary parameters were analysed were satisfied by any Company Register. However, the Estonian and Norwegian Registers can be used to identify any company by its name and registration number, since only they have passed quality checks of the relevant fields.
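To give a flavour of the three components named above, here is a minimal Python sketch. It is not the graphical DSLs from the paper, only an illustration under stated assumptions: a data object is modelled as a plain dictionary, a data quality specification as a list of rules for one use case, and the measuring process as a loop that applies the rules. The names `QualityRule`, `IDENTIFY_COMPANY` and `measure`, the 11-digit rule and the sample register are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# Assumption: a "data object" is a plain dict of field name -> value.
DataObject = Dict[str, Optional[str]]


@dataclass(frozen=True)
class QualityRule:
    """One condition of a (hypothetical) data quality specification."""
    field: str
    description: str
    check: Callable[[Optional[str]], bool]


def not_empty(value: Optional[str]) -> bool:
    return value is not None and value.strip() != ""


def digits_of_length(length: int) -> Callable[[Optional[str]], bool]:
    def check(value: Optional[str]) -> bool:
        return value is not None and value.isdigit() and len(value) == length
    return check


# Specification for ONE use case: "identify a company by name and number".
# The 11-digit rule is an illustrative assumption, not a real register rule.
IDENTIFY_COMPANY: List[QualityRule] = [
    QualityRule("name", "name must be present", not_empty),
    QualityRule("reg_number", "registration number must be 11 digits",
                digits_of_length(11)),
]


def measure(objects: List[DataObject],
            spec: List[QualityRule]) -> List[Tuple[int, str, str]]:
    """Apply every rule to every data object and collect the failures."""
    failures = []
    for index, obj in enumerate(objects):
        for rule in spec:
            if not rule.check(obj.get(rule.field)):
                failures.append((index, rule.field, rule.description))
    return failures


# Made-up sample data, for illustration only.
register = [
    {"name": "Alfa SIA", "reg_number": "40003000001"},
    {"name": "", "reg_number": "40003000002"},
    {"name": "Gamma SIA", "reg_number": "4000300"},
]

failures = measure(register, IDENTIFY_COMPANY)
for row, field, description in failures:
    print(f"row {row}: {field}: {description}")
```

The point of the sketch is the separation: the same `register` could be checked against a different specification for a different use case without touching the data or the measuring loop.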

Open Data Hackathon as a Tool for Increased Engagement of Generation Z: To Hack or Not to Hack? examines the role in the OGD initiative of open data hackathons, known as a form of civic innovation in which participants representing citizens can point out existing problems or social needs and propose a solution. Given the high social, technical, and economic potential of open government data (OGD), the concept of open data hackathons is becoming popular around the world. This concept has become popular in Latvia with the annual hackathons organised for a specific cluster of citizens – Generation Z. Contrary to the general opinion, the organizer suggests that the main goal of open data hackathons, raising awareness of OGD, has been achieved, and there has been a debate about the need to continue them. This study presents the latest findings on the role of open data hackathons and the benefits that they can bring to society, participants, and government. First, a systematic literature review is carried out to establish a knowledge base. Then, empirical research on 4 case studies of open data hackathons for Generation Z participants held between 2018 and 2021 in Latvia is conducted to understand which ideas dominated and what the main results of these events were for the OGD initiative. It demonstrates that, despite the widespread belief that young people are indifferent to current societal and natural problems, the ideas developed correspond to the current situation and are aimed at solving these problems, revealing aspects for improvement in the provision of data, infrastructure, culture, and government-related areas.

More to come, and let’s keep track of updates in this repository! Do not forget to check out the other works in the repository, as well as more of my work, which you can find here.

Keynote at the 5th International Conference on Advanced Research Methods and Analytics (CARMA 2023)

On June 28, I had the honor to participate in the opening of CARMA2023 – 5th International Conference on Advanced Research Methods and Analytics “Internet and Big Data in Economics and Social Sciences”, delivering my keynote “Public data ecosystems in and for smart cities: how to make open / Big / smart / geo data ecosystems value-adding for SDG-compliant Smart Living and Society 5.0?” in the spectacular city of Sevilla, Spain 🇪🇸 🇪🇸 🇪🇸. What an honor to open the conference immediately after the inaugural speech by the organizers and sponsors, including representatives of the Joint Research Center, European Commission (JRC), who even mentioned the topics I covered in my keynote (not limited to them, of course) as those that make this conference an event to attend and to learn from!!!

In this talk, as the title suggests, I:

- elaborated on the concepts of public / open data (incl. OGD), smart city and SDG, and how they are related;

- introduced the concept of Society 5.0 and how it is related to open data;

- and finally, and more importantly, discussed the public / open data ecosystem: what it is and what it consists of.

I then dived into (1) data-related aspects of the public data ecosystem, i.e. what are the data-related prerequisites for a sustainable and resilient data ecosystem? (2) data portals / platforms as entry points and how to make them sufficiently attractive for the target audience? (3) stakeholder engagement – how to involve the target audience? what are the benefits of their involvement? and some more things.

The public data ecosystem part was built around our “Transparency of open data ecosystems in smart cities: Definition and assessment of the maturity of transparency in 22 smart cities”, with some references to other studies such as “Transparency-by-design: What is the role of open data portals?”, “Timeliness of Open Data in Open Government Data Portals Through Pandemic-related Data: A long data way from the publisher to the user”, and “Open government data portal usability: A user-centred usability analysis of 41 open government data portals”, which were previously noticed by the Open Data Institute and by the Living Library, which recommends studies it sees as the “signal in the noise”.

For the data, apart from the almost “classical things”, I referred to the topic of “high-value datasets” and dived into a taxonomy we presented in “Towards High-Value Datasets determination for data-driven development: a systematic literature review” (also recommended by the Living Library as the “signal in the noise”), enriched by the results of my earlier study “Towards enrichment of the open government data: a stakeholder-centered determination of High-Value Data sets for Latvia” as well as the results of two international workshops we organized.

The part on the public / open data, smart city, SDG and Society 5.0 and how they are interrelated was, in turn, based on our Chapter “The Role of Open Data in Transforming the Society to Society 5.0: A Resource or a Tool for SDG-Compliant Smart Living?”, which was called by FIT Academy “a groundbreaking research”.

And for the engagement, it was mostly about workshops, datathons, hackathons, and data competitions, as well as co-creation: how the co-creation ecosystem emerges, what the prerequisites for this are etc., incl. references to “Open data hackathon as a tool for increased engagement of Generation Z: to hack or not to hack?” and “The Role of Open Government Data and Co-creation in Crisis Management: Initial Conceptual Propositions from the COVID-19 Pandemic”.

CARMA is a forum for researchers and practitioners to exchange ideas and advances on how emerging research methods and sources are applied to different fields of social sciences, as well as to discuss current and future challenges, with the main focus on topics such as:

- Internet and Big Data sources in economics and social sciences, including Social media and public opinion mining, Web scraping, Google Trends and Search Engine data, Geospatial and mobile phone data, Open data and public data;

- Big Data methods in economics and social sciences, such as Sentiment analysis, Internet econometrics, AI and Machine learning applications, Statistical learning, Information quality and assessment, Crowdsourcing, Natural Language processing, Explainability and interpretability;

- the applications of the above, including but not limited to Politics and social media, Sustainability and development, Finance applications, Official statistics, Forecasting and nowcasting, Bibliometrics and scientometrics, Social and consumer behaviour, mobility patterns, eWOM and social media marketing, Labor market, Business analytics with social media, Advances in travel, tourism and leisure, Digital management, Marketing Intelligence analytics, Data governance;

- Digital transition and global society, which, in turn, expects contributions in relation to Privacy and legal aspects, Electronic Government, Data Economy, Smart Cities, Industry adoption.

In addition to the regular sessions, poster session and two keynotes, a Special JRC session (EC) took place, during which Luca Barbaglia, Nestor Duch Brown, Matteo Sostero and Paolo Canfora presented projects they work on.

Many thanks go to the organizers and sponsors of CARMA2023 – Universidad de Sevilla, Cátedra Metropol Parasol, Cátedra Digitalización Empresarial, IBM, Universitat Politècnica de València, Joint Research Center – European Commission and Coca-Cola, who made this event a true success. I enjoyed this experience very much! Excellent venue! Great audience! ¡Muchas gracias!

References:

- Nikiforova, A., Flores, M. A. A., & Lytras, M. D. (2023). The role of open data in transforming the society to Society 5.0: a resource or a tool for SDG-compliant Smart Living? In Smart Cities and Digital Transformation: Empowering Communities, Limitless Innovation, Sustainable Development and the Next Generation. Emerald Publishing Limited.

- Lnenicka, M., Nikiforova, A., Luterek, M., Azeroual, O., Ukpabi, D., Valtenbergs, V., & Machova, R. (2022). Transparency of open data ecosystems in smart cities: Definition and assessment of the maturity of transparency in 22 smart cities. Sustainable Cities and Society, 82, 103906, https://doi.org/10.1016/j.scs.2022.103906

- Nikiforova, A., & McBride, K. (2021). Open government data portal usability: A user-centred usability analysis of 41 open government data portals. Telematics and Informatics, 58.

- Nikiforova, A. (2020). Timeliness of Open Data in Open Government Data Portals Through Pandemic-related Data: A long data way from the publisher to the user. In 2020 Fourth International Conference on Multimedia Computing, Networking and Applications (MCNA) (pp. 131-138).

- Lnenicka, M., & Nikiforova, A. (2021). Transparency-by-design: What is the role of open data portals? Telematics and Informatics, 61.

- Nikiforova, A., Rizun, N., Ciesielska, M., Alexopoulos, C., & Miletič, A. (2023). Towards High-Value Datasets determination for data-driven development: a systematic literature review.

- McBride, K., Nikiforova, A., Lnenicka, M. ‘The Role of Open Government Data and Co-creation in Crisis Management: Initial Conceptual Propositions from the COVID-19 Pandemic’. 1 Jan. 2023 : 219 – 238.

- Nikiforova, A. (2022). Open data hackathon as a tool for increased engagement of Generation Z: to hack or not to hack? In International Conference on Electronic Governance with Emerging Technologies (pp. 161-175). Cham: Springer Nature Switzerland.

- Nikiforova, A. (2022). Gen Z open data hackathon–civic innovation with digital natives: to hack or not to hack. In Proceedings of ongoing research, practitioners, workshops, posters, and projects of the international conference EGOV-CeDEM-ePart 202. Linkoping, Sweden (pp. 251-253).

- Nikiforova, A. (2021, October). Towards enrichment of the open government data: a stakeholder-centered determination of High-Value Data sets for Latvia. In Proceedings of the 14th International Conference on Theory and Practice of Electronic Governance (pp. 367-372).

📢🚨⚠️Paper alert! Overlooked aspects of data governance: workflow framework for enterprise data deduplication

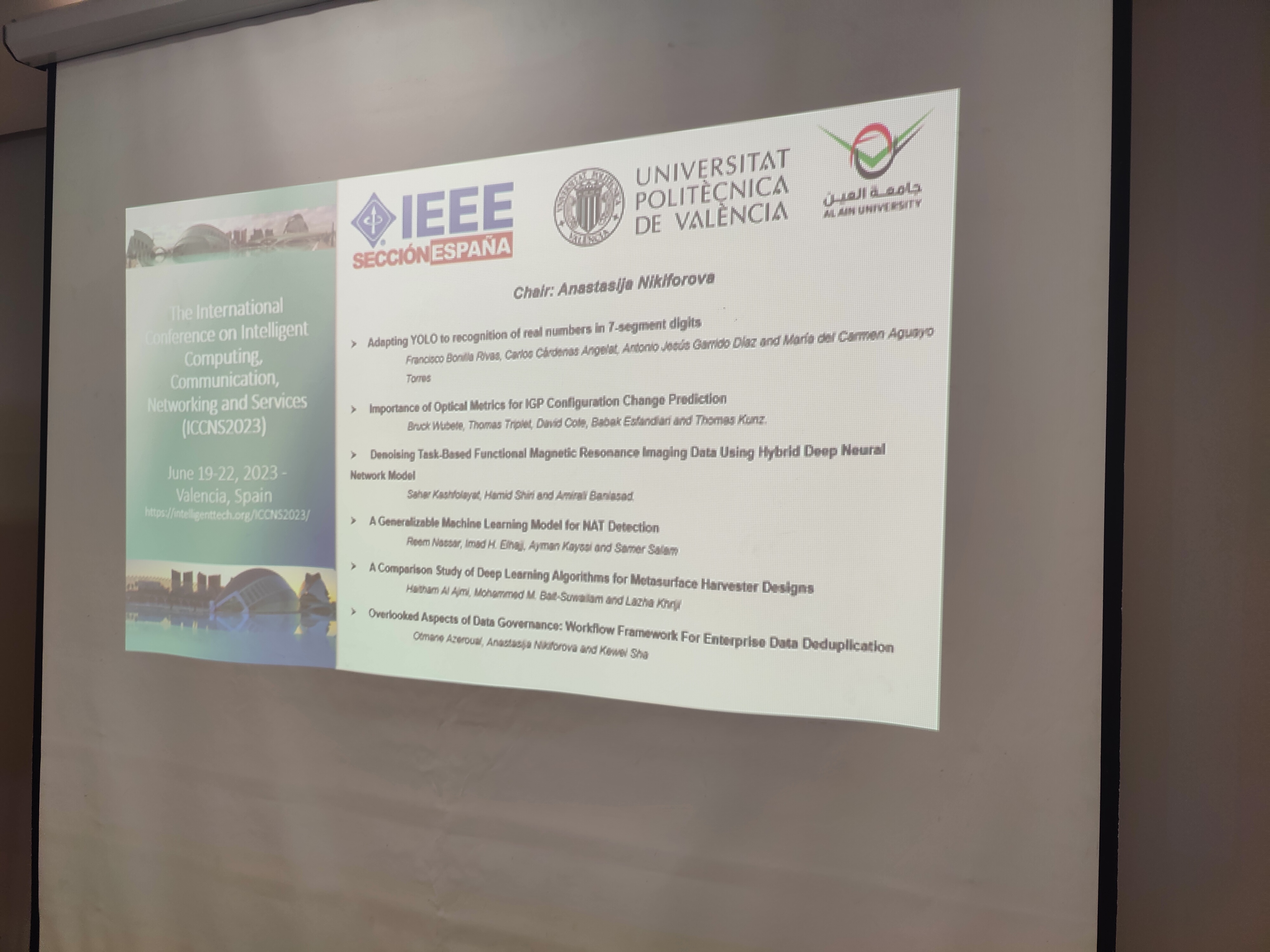

This time I would like to recommend reading the new paper “Overlooked aspects of data governance: workflow framework for enterprise data deduplication”, which has just been presented at the IEEE-sponsored International Conference on Intelligent Computing, Communication, Networking and Services (ICCNS2023). This “just”, btw, means June 19, the day after my birthday, so I decided to start my new year with one more conference and paper. And yes, this means that finding time for myself, reaching work-life balance etc., as many of those who congratulated me were wishing, is still something I have to try to achieve; this time, I again decided to give preference to my career over my personal life (what a surprise, isn’t it?) 🙂 Moreover, this is a conference where I am also part of the Steering Committee and the Technical Program Committee, as well as the publicity chair. During the conference, I also acted as the session chair of its first session, which I consider to be a special honor. For me the session was very smooth, interactive and insightful, thanks, of course, to its participants & authors and their studies, which allowed us to have a fruitful discussion and get some insights for our further studies (yes, I also took away one very useful idea for further investigation). Thank you to all contributors, with special thanks to Francisco Bonilla Rivas, Bruck Wubete, Reem Nassar, Haitham Al Ajmi.

I am also proud of having secured one of the four keynote speakers for this conference – prof. Eirini Ntoutsi from the Bundeswehr University Munich (UniBw-M), Germany, who delivered the keynote “Bias and Discrimination in AI Systems: From Single-Identity Dimensions to Multi-Discrimination”, which I had heard at one of the previous conferences I attended and decided that it is a “must” for our conference as well – super glad that Eirini accepted our invitation! I should immediately mention that the other keynotes were excellent as well – Giancarlo Fortino (University of Calabria, Italy), Dofe Jaya (Computer Engineering Department, California State University, Fullerton, California, USA), Sandra Sendra (Polytechnic University of Valencia, Spain).

The paper I presented is authored by a very international team of three: Otmane Azeroual, German Centre for Higher Education Research and Science Studies (DZHW), Germany; myself, Anastasija Nikiforova, Faculty of Science and Technology, Institute of Computer Science, University of Tartu, Estonia & Task Force “FAIR Metrics and Data Quality”, European Open Science Cloud; and Kewei Sha, College of Science and Engineering, University of Houston Clear Lake, USA.

So, what is the paper about? It is (or should be) clear that data quality in companies is decisive and critical to the benefits their products and services can provide. However, in heterogeneous IT infrastructures where, e.g., different applications for Enterprise Resource Planning (ERP), Customer Relationship Management (CRM), product management, manufacturing, and marketing are used, duplicates occur, e.g., multiple entries for the same customer or product in a database or information system. There can be several reasons for this, incl. but not limited to the growing volume of data, which is itself driven by, among other things, the adoption of cloud technologies, the use of multiple different sources, and the proliferation of connected personal and work devices in homes, stores, offices and supply chains. Whatever the reason, non-unique or duplicate records degrade data quality, which, in turn, ultimately leads to inaccurate analysis; poor, distorted or skewed decisions; and distorted insights provided by Business Intelligence (BI) or machine learning (ML) algorithms, models, forecasts, and simulations for which the data form the input. It also affects other data-driven activities such as service personalisation in terms of their accuracy, trustworthiness and reliability, user acceptance / adoption and satisfaction, customer service, risk management, crisis management, as well as resource management (time, human, and fiscal), not to mention wasted resources and employees who are less likely to trust the data and associated applications, thereby affecting the company image. This, in turn, can lead to the failure of a project, if not of a business. At the same time, the amount of data that companies collect is growing exponentially, making it difficult to manage them effectively. Thus, both ex-ante and ex-post deduplication mechanisms are critical in this context to ensure sufficient data quality and are usually integrated into a broader data governance approach. In this paper, we develop such a conceptual data governance framework for effective and efficient management of duplicate data, and improvement of data accuracy and consistency in medium to large data ecosystems. We present methods and recommendations for companies to deal with duplicate data in a meaningful way, while the presented framework is integrated into one of the most popular data quality tools – Data Cleaner.

In short, in this paper we:

- first, present methods for how companies can deal meaningfully with duplicate data. Initially, we focus on data profiling using several analysis methods applicable to different types of datasets, incl. analysis of different types of errors, structuring, harmonizing, & merging of duplicate data;

- second, we propose methods for reducing the number of comparisons and matching attribute values based on similarity (in medium to large databases). The focus is on easy integration and duplicate detection configuration so that the solution can be easily adapted to different users in companies without domain knowledge. These methods are domain-independent and can be transferred to other application contexts to evaluate the quality, structure, and content of duplicate / repetitive data;

- finally, we integrate the chosen methods into the framework of Hildebrandt et al. [ref 2]. We also explore some of the most common data quality tools in practice, into which we integrate this framework.

After that, we test and validate the framework. The final refined solution provides the basis for subsequent use. It consists of detecting and visualizing duplicates, presenting the identified redundancies to the user in a user-friendly manner to enable and facilitate their further elimination.
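To make the second step of the list above more tangible, here is a minimal Python sketch of the general idea of reducing the number of comparisons (often called blocking) and then matching attribute values by similarity. This is not the framework from the paper and not Data Cleaner’s implementation; the blocking key (postcode plus first letter of the name), the 0.85 threshold, the use of the standard library’s `difflib.SequenceMatcher` and the sample records are all assumptions chosen for illustration.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations


def normalize(text):
    """Lower-case and collapse whitespace so trivial variations do not matter."""
    return " ".join(text.lower().split())


def blocking_key(record):
    """Hypothetical blocking key: postcode plus first letter of the name."""
    return (record["postcode"], normalize(record["name"])[:1])


def similarity(a, b):
    """String similarity in [0, 1] based on difflib's matching blocks."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def find_duplicates(records, threshold=0.85):
    """Return (i, j, score) for record pairs that look like duplicates.

    Only records that share a blocking key are compared, which avoids
    comparing every record with every other record.
    """
    blocks = defaultdict(list)
    for index, record in enumerate(records):
        blocks[blocking_key(record)].append(index)

    pairs = []
    for indices in blocks.values():
        for i, j in combinations(indices, 2):
            score = similarity(records[i]["name"], records[j]["name"])
            if score >= threshold:
                pairs.append((i, j, score))
    return pairs


# Made-up customer records, for illustration only.
customers = [
    {"name": "Acme Corporation", "postcode": "1010"},
    {"name": "ACME  Corporation ", "postcode": "1010"},
    {"name": "Acme Corporatoin", "postcode": "1010"},
    {"name": "Baltic Timber", "postcode": "1010"},
    {"name": "Acme Corporation", "postcode": "2020"},
]

duplicates = find_duplicates(customers)
for i, j, score in duplicates:
    print(f"records {i} and {j} look like duplicates (similarity {score:.2f})")
```

With five records, a naive approach would compare 10 pairs, whereas here only the three records in the same block are compared. The trade-off is visible too: the last record is never compared with the first three because its postcode differs, so the choice of blocking key decides which duplicates can be found at all.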

With this paper we aim to support research in data management and data governance by identifying duplicate data at the enterprise level and meeting today’s demands for increased connectivity / interconnectedness, data ubiquity, and multi-data sourcing. In addition, the proposed conceptual data governance framework aims to provide an overview of data quality, accuracy and consistency to help practitioners approach data governance in a structured manner.

In general, what is needed is not only technological solutions that identify / detect poor quality data and allow their examination and correction, or that prevent them by integrating some controls into the system design, striving for “data quality by design” [ref3, ref4], but also cultural changes related to data management and governance within the organization. These two perspectives form the basis of a healthy business data ecosystem. Thus, the presented framework describes the hierarchy of people who are allowed to view and share data, rules for data collection, data privacy, data security standards, and channels through which data can be collected. Ultimately, this framework will help users be more consistent in data collection and data quality for reliable and accurate results of data-driven actions and activities.
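As a small illustration of what a control integrated into the system design could look like (an ex-ante mechanism, as opposed to the ex-post detection of already stored duplicates), consider the following Python sketch. It is only an example under my own assumptions, not part of the presented framework: the `CustomerRegistry` class, the `DuplicateRecordError` exception and the choice of normalized name plus e-mail as the uniqueness key are all hypothetical.

```python
class DuplicateRecordError(ValueError):
    """Raised when an incoming record collides with an existing one."""


class CustomerRegistry:
    """Toy in-memory store that rejects duplicates at insertion time."""

    def __init__(self):
        self._ids_by_key = {}

    @staticmethod
    def _key(name, email):
        # Assumption: normalized name plus lower-cased e-mail identifies a customer.
        return (" ".join(name.lower().split()), email.strip().lower())

    def add(self, name, email):
        key = self._key(name, email)
        if key in self._ids_by_key:
            raise DuplicateRecordError(
                f"duplicate of record {self._ids_by_key[key]}"
            )
        record_id = len(self._ids_by_key) + 1
        self._ids_by_key[key] = record_id
        return record_id


registry = CustomerRegistry()
first_id = registry.add("Anna Berzina", "anna@example.com")

try:
    # Same customer, different casing and spacing: rejected before it is stored.
    registry.add("  anna  BERZINA", "Anna@Example.com ")
    rejected = False
except DuplicateRecordError:
    rejected = True

print(first_id, rejected)
```

An exact-key check like this only catches trivial variations, so in practice it would complement, not replace, similarity-based detection and the organizational rules mentioned above.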

Sounds interesting? Read the paper -> here (to be cited as: Azeroual, O., Nikiforova, A., Sha, K. (2023, June). Overlooked aspects of data governance: workflow framework for enterprise data deduplication. In 2023 International Conference on Intelligent Computing, Communication, Networking and Services (ICCNS2023). IEEE (in print))

The International Conference on Intelligent Computing, Communication, Networking and Services (ICCNS2023) is co-located with the International Conference on Multimedia Computing, Networking and Applications (MCNA2023); both are sponsored by IEEE (IEEE Espana Seccion), Universitat Politecnica de Valencia, and Al Ain University. Many thanks to the organizers – Jaime Lloret, Universitat Politècnica de València, Spain & Yaser Jararweh, Jordan University of Science and Technology, Jordan & Marios C. Angelides, Brunel University London, UK & Muhannad Quwaider, Jordan University of Science and Technology, Jordan.

References:

Azeroual, O., Nikiforova, A., Sha, K. (2023, June). Overlooked aspects of data governance: workflow framework for enterprise data deduplication. In 2023 International Conference on Intelligent Computing, Communication, Networking and Services (ICCNS2023). IEEE (in print).

Hildebrandt, K., Panse, F., Wilcke, N., & Ritter, N. (2017). Large-scale data pollution with Apache Spark. IEEE Transactions on Big Data, 6(2), 396-411

Guerra-García, C., Nikiforova, A., Jiménez, S., Perez-Gonzalez, H. G., Ramírez-Torres, M., & Ontañon-García, L. (2023). ISO/IEC 25012-based methodology for managing data quality requirements in the development of information systems: Towards Data Quality by Design. Data & Knowledge Engineering, 145, 102152.

Corrales, D. C., Ledezma, A., & Corrales, J. C. (2016). A systematic review of data quality issues in knowledge discovery tasks. Revista Ingenierías Universidad de Medellín, 15(28), 125-150.

HackCodeX Forum Keynote “Data Quality as a prerequisite for your business success: when should I start taking care of it?”

On June 5, I was delighted to be a keynote speaker at the HackCodeX Forum, delivering a keynote titled “Data Quality as a prerequisite for your business success: when should I start taking care of it?” in my hometown – Riga, Latvia. HackCodeX Forum is a one-day event where international experts share their experience and knowledge about emerging technologies and areas such as Artificial Intelligence, Security, Data Quality, Quantum Computing, Sustainability, Open Data, Privacy, Ethics, Digital Services (with a keynote from the CEO of SK ID Solutions – one of the solutions that make Estonia the #1 digital nation) etc. This time I was invited to cover the topic of Data Quality, and I was happy to do so, especially considering that the HackCodeX Forum closes one of the leading hackathons in Europe. Riga was fascinated by and passionate about it, as evidenced by the rich list of advertisements we all saw in the last weeks and months (Delfi, Haker.lv, kripto.media, kursors.lv, labsoflatvia.lv to name just a few). This year the hackathon was held in Latvia and brought together around 500 developers, designers and entrepreneurs to create and innovate, solving this year’s 5 challenges:

- 🏆 ATEA challenge: Minimise manual work and drive data-powered decision-making

- 🏆 Emergn challenge: Improve the quality of life for people with disabilities

- 🏆 UI.COM & Riga TechGirls challenge: Help shoppers make more sustainable purchasing decisions

- 🏆 Game Changer Audio (GCA) challenge: Identify each individual note by listening to notes being played real-time

- 🏆 Ministry of Education and Science challenge: Help make education hackable again!

For me, in turn, it was yet another audience, yet another experience.

In short, in this Star Wars-style presentation (yes, I am a fan, and given the number of DQ memes in this style, I am no exception, so I cannot say that I am a geek or a weird person, but rather a normal DQ/IT person), I urged the audience to “help R2D2 save the galaxy!”.

Images from: History in Objects: Death Star Plans Datacard • Lucasfilm, Video Analysis of an Exploding Death Star | WIRED, Post | LinkedIn, Destruction of Despayre | Wookieepedia | Fandom. Special thanks to George Firican for the idea and inspiration!

In a bit more detail, I elaborated on the importance and relevance of data quality regardless of the age of this topic [which is older than me], on data quality management, and on the factors the DQM approach depends on. The popularity and importance of the topic are undoubtedly due to the amount of data we are dealing with and the fact that we live in a data-driven world, where data are everywhere: they are generated continuously by multiple sources, which include not only our devices or sensors, but also ourselves (however, with the help of the two above). This led to data being proclaimed, some time ago, to be the new oil. Have you heard this? I am sure you have. But have you thought about this statement? Is it true? False? Something in between? Bingo! While there are commonalities between data and oil, they are rather few in number. One interesting read devoted to this comes from Forbes. They admit that both artifacts, oil and data, can be seen as similar since both are “power”, including being the power of those who own them. In other words, they compare data owners such as Alibaba, Google, Twitter, Facebook etc. to the oil barons of 100 years ago. But otherwise, a more in-depth comparative analysis reveals mostly differences. To name just a few:

💡 oil is a finite resource, while data are not. Instead, data are effectively infinitely durable and reusable, and treating them like oil, i.e. storing them in silos, reduces their value, usefulness and potential as a whole;

💡 another difference is in transportation: oil requires huge amounts of resources to be transported to where and when it is needed, while data can be replicated indefinitely and moved around the world at very high speed and, more importantly, at very low cost;

💡 yet another difference lies in what happens to oil and data once they have been used. When oil is used, its energy is lost (as heat or light) or it is permanently converted into another form such as plastic. The usefulness of data, in contrast, tends to increase with use, i.e. new uses arise, data are eventually turned into training data etc.;

💡 as the world’s oil reserves dwindle, extracting oil becomes increasingly difficult and expensive, while data are becoming increasingly available, owing to, among other things, technological advances and the growing number of data producers;

💡 and last but not least, oil drilling damages the natural environment and exploits finite natural resources, while data mining does not – at least not intrinsically. Of course, here we do not mention (but should not forget about) the electricity used to run the systems that process the data and the relatively low uptake of green computing (aka sustainable computing).

Thus, as Forbes suggests, if we want to talk about data as a power source or fuel, it makes much more sense to compare them with renewable sources 🌎🌎🌎 such as the sun ☀️, wind 💨 and tides 🌊. All in all, data can be seen as more than oil. Hence the popularity and importance of the data quality topic.

The factors that can affect the DQM approach, in turn, vary. They start with those stemming from the relative nature of data quality as a phenomenon, i.e., its definition, the variety (and lack of unambiguous definitions) of data quality dimensions, for which data quality metrics are expected to be selected, DQ dynamism, dependence on the user and use case etc. (some of these are discussed in “Towards a data quality framework for EOSC” and “Definition and Evaluation of Data Quality: a user-oriented data object-driven approach to data quality assessment”), and continue with the data artifact whose quality is under analysis. In other words, is this about a data object or dataset? A database? A data repository? An information system?

If it is a data object, the next “level” of factors is the data owner – known or unknown (third-party data such as open data) – and the structure of the data: structured, semi-structured or unstructured?
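To make the dependence on the user and use case mentioned above a bit more tangible, here is a minimal Python sketch. It is not the approach from the articles referenced in this post, just an illustration: the records, the field names (`name`, `reg_no`, `email`), the rule names and the 11-digit format are all hypothetical assumptions. The idea is that the same data object is assessed against different rule sets, each tied to a DQ dimension, depending on who uses the data and for what.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# A data object is represented here as a plain dict (an assumption for the sketch).
Record = Dict[str, Optional[str]]


@dataclass
class Rule:
    """A user-defined quality requirement tied to one DQ dimension."""
    name: str
    dimension: str
    check: Callable[[Record], bool]


def pass_rate(records: List[Record], rule: Rule) -> float:
    """Share of records that satisfy the rule (1.0 for an empty list)."""
    if not records:
        return 1.0
    passed = sum(1 for record in records if rule.check(record))
    return passed / len(records)


def assess(records: List[Record], rules: List[Rule]) -> Dict[str, float]:
    """Evaluate every rule of one use case against the same records."""
    return {rule.name: pass_rate(records, rule) for rule in rules}


def has_11_digits(record: Record) -> bool:
    """Hypothetical format requirement for the registration number."""
    value = record.get("reg_no") or ""
    return value.isdigit() and len(value) == 11


# Hypothetical sample data, invented purely for illustration.
COMPANIES: List[Record] = [
    {"name": "Alpha", "reg_no": "40001234567", "email": "info@alpha.example"},
    {"name": "Beta", "reg_no": "4000", "email": None},
    {"name": "Gamma", "reg_no": "40007654321", "email": None},
    {"name": None, "reg_no": "40001112223", "email": "contact@delta.example"},
]

# Use case 1: a mailing campaign only cares about e-mail completeness.
MAILING_RULES = [
    Rule("email_present", "completeness", lambda r: bool(r.get("email"))),
]

# Use case 2: a registry lookup cares about names and well-formed numbers.
REGISTRY_RULES = [
    Rule("name_present", "completeness", lambda r: bool(r.get("name"))),
    Rule("reg_no_11_digits", "validity", has_11_digits),
]

if __name__ == "__main__":
    print("mailing :", assess(COMPANIES, MAILING_RULES))
    print("registry:", assess(COMPANIES, REGISTRY_RULES))
```

With this sample, the mailing use case sees only half of the records as usable, while the registry use case sees three quarters passing each of its rules. The data did not change, only the requirements did.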

As for information systems / software, I find that “think data quality first” and “data quality by design” are two mantras to keep in mind. The latter is something we studied together with my colleagues from Mexico, adapting the “quality by design” principle into “data quality by design”. I reported on the respective study before – “ISO/IEC 25012-based methodology for managing data quality requirements in the development of information systems: Towards data quality by design” (read here) – where we proposed DAQUAVORD, a Methodology for Project Management of Data Quality Requirements Specification based on the Viewpoint-Oriented Requirements Definition (VORD) method and the latest and most generally accepted ISO/IEC 25012 standard. Its main idea is to start thinking about data quality as soon as the development of the system starts, to make sure that a certain level of data quality is ensured by design, i.e. translated into both functional and non-functional requirements.

Alternatively, data quality can be addressed not only before, but also during development or even when the system is already in production. Some solutions exist here, but I typically use the opportunity to self-advertise previous projects and studies I worked on, especially this one, since it is based on the results of my PhD thesis, which is summarized in “Definition and Evaluation of Data Quality: a user-oriented data object-driven approach to data quality assessment”. Namely, this is the Data Quality Model-based testing approach (DQMBT) for testing information systems, which uses the data object-driven data quality model as a testing model and was presented in the context of an e-scooter system and an insurance system. Both, however, are rather ad-hoc approaches whose main value lies in the conceptual idea, not the implementation, at least at this point.

For the repository, in turn, is it a data warehouse or a data lake? Or maybe even a data lakehouse? For the latter two, metadata and data governance become a “must” to avoid GIGO (the garbage in – garbage out effect) and to keep the data lake from turning into a data swamp. This is briefly addressed in “Combining data lake and data wrangling for ensuring data quality in CRIS”, which, among other things, elaborates on why data wrangling should be preferred over data cleaning.

The importance of both metadata and data governance was then emphasized, and for the latter I asked Elon Musk for support 😀 More precisely, I mentioned him to back up the claims about the importance of data governance, which he once named as a key to improving the product you are delivering. I just wanted to make my words a bit more authoritative, i.e. he is seen as a more or less successful businessman, isn’t he? 😀

You can find slides here or watch the video 👇

Big thanks to both the organizers – Helve – and the supporters who made both the hackathon and the forum a success. More precisely, Techchill, techhub, Lift 99, #RigaTechGirls, justjoin.it, Oradea.Tech.Hub, RTU Design Factory, Startup Lithuania, Kaunas Technology University, Startup Estonia, Spring Hub, kood / Johvi, Technopol, Enterprise Forum CEE, Slush, Aaltos, AWS (Amazon Web Services), Google for Startups, Junction, Bird Incubator, EdTech Estonia, Sphere.it, Codecamp, Nine brains, Draper Startup House, Eiropas Digitālās inovācijas centrs, 28Stone.

And some more very special actors of the community, who were at the core of this hackathon edition – Emergn, Izglītības un zinātnes ministrija (Ministry of Education and Science), EPAM Systems Latvia, Atea Global Services Ltd., Ubiquiti Inc. & RigaTechGirls, Investment and Development Agency of Latvia (LIAA).